Identity in Microservices

A view on Service-to-Service Authentication Patterns

Microservices architectures promise scalability and flexibility, but they transform security from a single perimeter problem into a distributed trust challenge. When your application becomes dozens of services communicating across network boundaries, every inter-service call becomes a potential attack vector.

Unlike monolithic applications where components communicate through in-memory calls or local databases, microservices must authenticate each request over the network. A compromised service can potentially impersonate others, access unauthorised data, or pivot through your entire system. Traditional approaches like shared secrets or IP-based trust fall apart at scale.

The stakes are higher than inconvenience — service identity failures can lead to data breaches, privilege escalation, and compliance violations. Consider an e-commerce platform where the recommendation service gains unauthorised access to payment processing, or a healthcare system where diagnostic services can access patient records beyond their authorisation scope.

This article examines three battle-tested patterns for securing service-to-service communication: mutual TLS (mTLS) for cryptographic identity verification, Service Mesh Identity for infrastructure-managed authentication, and Token Relay patterns for propagating user context through service chains. Each approach addresses different architectural needs and operational constraints.

Mutual TLS (mTLS): Cryptographic Service Identity

Mutual TLS extends standard TLS by requiring both client and server to present valid certificates, creating bidirectional authentication. In microservices architectures, this means every service acts as both client and server, presenting its identity certificate for each connection.

mTLS Implementation Architecture

    # Certificate Authority Structure
    Root CA
    ├── Intermediate CA (Infrastructure)
    │   ├── Service A Certificate
    │   ├── Service B Certificate
    │   └── API Gateway Certificate
    └── Intermediate CA (External)
        └── Client Certificates

The mTLS handshake process involves six distinct steps:

1. Client initiates the connection with a ClientHello message.

2. Server presents its certificate, which contains its public key and identity claims.

3. Server requests a client certificate via a CertificateRequest message, sent in the same flight as its own certificate.

4. Client verifies the server certificate against its trusted CA bundle.

5. Client presents its certificate and proves possession of the private key by signing the handshake transcript (CertificateVerify).

6. Server verifies the client certificate, and both sides establish the encrypted channel.
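
To make the server side of these steps concrete, here is a minimal sketch using Python's standard `ssl` module. The function name `build_mtls_server_context` and its optional file-path parameters are illustrative assumptions, not part of any framework; the point is only that requiring a client certificate is a property of the TLS context.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Return a server-side TLS context that demands a client certificate.

    The file paths are hypothetical; when omitted, only the policy is set.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the server request a client certificate and abort
    # the handshake if none is presented or it does not chain to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity (step 2 of the handshake).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # CA bundle used to verify client certificates (step 6).
        ctx.load_verify_locations(cafile=ca_file)
    return ctx


ctx = build_mtls_server_context()  # configuration only, no files loaded
print(ctx.verify_mode == ssl.CERT_REQUIRED)
```

In a real service the three paths would point at the service certificate, its private key, and the internal CA bundle.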

Practical mTLS Implementation

Here’s how to implement mTLS in a Go microservice:

    package main

    import (
        "crypto/tls"
        "crypto/x509"
        "errors"
        "net/http"
        "os"
    )

    // loadCAPool reads a PEM bundle and returns a pool with its certificates.
    func loadCAPool(path string) (*x509.CertPool, error) {
        pem, err := os.ReadFile(path)
        if err != nil {
            return nil, err
        }
        pool := x509.NewCertPool()
        if !pool.AppendCertsFromPEM(pem) {
            return nil, errors.New("no certificates found in " + path)
        }
        return pool, nil
    }

    func setupMTLSServer() *http.Server {
        // Load server certificate and key
        serverCert, err := tls.LoadX509KeyPair("server.crt", "server.key")
        if err != nil {
            panic(err)
        }

        // Load CA certificate for client verification
        caCertPool, err := loadCAPool("ca.crt")
        if err != nil {
            panic(err)
        }

        tlsConfig := &tls.Config{
            Certificates: []tls.Certificate{serverCert},
            ClientAuth:   tls.RequireAndVerifyClientCert,
            ClientCAs:    caCertPool,
            MinVersion:   tls.VersionTLS12,
            // Applies to TLS 1.2 only; Go chooses TLS 1.3 suites itself.
            CipherSuites: []uint16{
                tls.TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,
                tls.TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,
            },
        }

        return &http.Server{
            Addr:      ":8443",
            TLSConfig: tlsConfig,
        }
    }

    func setupMTLSClient() *http.Client {
        // Load the client certificate presented to the server
        clientCert, err := tls.LoadX509KeyPair("client.crt", "client.key")
        if err != nil {
            panic(err)
        }

        // Load CA certificate for server verification
        caCertPool, err := loadCAPool("ca.crt")
        if err != nil {
            panic(err)
        }

        tlsConfig := &tls.Config{
            Certificates: []tls.Certificate{clientCert},
            RootCAs:      caCertPool,
            MinVersion:   tls.VersionTLS12,
        }

        return &http.Client{
            Transport: &http.Transport{
                TLSClientConfig: tlsConfig,
            },
        }
    }

    func main() {
        server := setupMTLSServer()
        // Empty paths: the key pair in TLSConfig.Certificates is used.
        if err := server.ListenAndServeTLS("", ""); err != nil {
            panic(err)
        }
    }

Certificate Lifecycle Management

The biggest operational challenge with mTLS is certificate management at scale. Consider these automation strategies:

Automated Certificate Provisioning:

    # cert-manager Kubernetes integration
    apiVersion: cert-manager.io/v1
    kind: Certificate
    metadata:
      name: service-a-cert
      namespace: production
    spec:
      secretName: service-a-tls
      issuerRef:
        name: internal-ca-issuer
        kind: ClusterIssuer
      commonName: service-a.production.svc.cluster.local
      dnsNames:
        - service-a.production.svc.cluster.local
      duration: 720h # 30 days
      renewBefore: 240h # Renew 10 days before expiry

Certificate Rotation Strategy:

Implement overlapping validity periods (old and new certificates valid simultaneously)

Use certificate serial numbers or thumbprints for cache invalidation

Monitor certificate expiry across all services with alerting
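
The renewal window and the expiry alerting described above come down to simple date arithmetic. The sketch below uses the 720h lifetime and 240h renewal window from the cert-manager example; the function name `renewal_status` and the issue date are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta, timezone


def renewal_status(not_after: datetime, renew_before: timedelta, now: datetime) -> str:
    """Classify a certificate as 'ok', 'renew' or 'expired' at time `now`."""
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "expired"
    if remaining <= renew_before:
        # Inside the renewal window: a new certificate should be issued while
        # the old one is still valid, giving the overlap described above.
        return "renew"
    return "ok"


# Values mirroring the cert-manager example: 720h lifetime, 240h renewal window.
issued = datetime(2024, 1, 1, tzinfo=timezone.utc)  # hypothetical issue date
not_after = issued + timedelta(hours=720)
renew_before = timedelta(hours=240)

for day in (10, 21, 31):
    print(day, renewal_status(not_after, renew_before, issued + timedelta(days=day)))
```

An alerting job could run the same check against every service certificate and page only when a certificate sits in the renewal window for longer than expected.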

mTLS Performance Considerations

mTLS introduces latency overhead, particularly during the initial handshake:

    Typical mTLS Handshake Overhead:
    - Additional RTT for certificate exchange: 1-2ms
    - Certificate validation: 0.5-1ms
    - Cryptographic operations: 0.1-0.5ms
    Total: ~2-4ms per new connection

Mitigation strategies include:

Connection pooling to amortise handshake costs

Session resumption using TLS session tickets

Certificate caching to avoid repeated validation

Hardware acceleration for cryptographic operations
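
A quick calculation shows why connection pooling is usually the first mitigation to reach for. The sketch assumes a 3 ms handshake, a value inside the range quoted above, and a hypothetical helper name `amortised_handshake_ms`; real numbers should come from your own measurements.

```python
def amortised_handshake_ms(handshake_ms: float, requests_per_connection: int) -> float:
    """Average handshake cost per request when a connection is reused."""
    if requests_per_connection < 1:
        raise ValueError("requests_per_connection must be at least 1")
    return handshake_ms / requests_per_connection


# Assumed handshake cost of 3 ms, inside the ~2-4 ms range quoted above.
for reuse in (1, 10, 100):
    per_request = amortised_handshake_ms(3.0, reuse)
    print(f"{reuse:>3} requests per connection: {per_request:.2f} ms each")
```

With one request per connection the full handshake cost lands on every call; with a hundred requests per pooled connection it shrinks to a few hundredths of a millisecond.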

When to Choose mTLS

Ideal scenarios:

High-security environments (financial services, healthcare)

Compliance requirements mandating cryptographic authentication

Services with predictable, long-lived connections

Infrastructure you fully control (no third-party dependencies)

Avoid mTLS when:

Rapid service deployment cycles make certificate management burdensome

Performance requirements are extremely stringent

Third-party services don’t support client certificates

Development teams lack PKI expertise

Service Mesh Identity: Infrastructure-Managed Security

Service meshes abstract away the complexity of mTLS by handling certificate provisioning, rotation, and policy enforcement at the infrastructure layer. Popular implementations include Istio, Linkerd, and Consul Connect.

SPIFFE/SPIRE Identity Framework

Many service meshes issue workload identities that follow the SPIFFE (Secure Production Identity Framework for Everyone) specification:

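    # SPIFFE Identity Document (SVID)
    {
      "sub": "spiffe://example.com/payment-service",
      "aud": ["spiffe://example.com/api-gateway"],
      "exp": 1640995200,
      "iat": 1640908800,
      "spiffe_id": "spiffe://example.com/payment-service",
      "workload_selectors": {
        "k8s:deployment": "payment-service",
        "k8s:namespace": "production"
      }
    }

This is an illustrative view. In a real JWT-SVID the SPIFFE ID is carried in the sub claim alongside aud and exp, while workload selectors belong to the SPIRE registration entry used during workload attestation rather than to the SVID itself.

Istio Service Mesh Implementation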

Here’s how Istio handles service identity and mTLS:

    # Automatic mTLS enforcement
    apiVersion: security.istio.io/v1beta1
    kind: PeerAuthentication
    metadata:
      name: default
      namespace: production
    spec:
      mtls:
        mode: STRICT
    ---
    # Fine-grained authorization policy
    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: payment-service-policy
      namespace: production
    spec:
      selector:
        matchLabels:
          app: payment-service
      rules:
        # from, to and when belong to one rule, so all three must match
        - from:
            - source:
                principals: ["cluster.local/ns/production/sa/api-gateway"]
          to:
            - operation:
                methods: ["POST"]
                paths: ["/payments/*"]
          when:
            - key: request.headers[user-role]
              values: ["admin", "payment-processor"]

Sidecar Proxy Architecture

Service meshes deploy sidecar proxies (typically Envoy) alongside each service:

    ┌─────────────────┐    ┌─────────────────┐
    │    Service A    │    │    Service B    │
    │                 │    │                 │
    │ ┌─────────────┐ │    │ ┌─────────────┐ │
    │ │ App Process │ │    │ │ App Process │ │
    │ └─────────────┘ │    │ └─────────────┘ │
    │ ┌─────────────┐ │    │ ┌─────────────┐ │
    │ │Envoy Sidecar│◄┼────┼►│Envoy Sidecar│ │
    │ └─────────────┘ │    │ └─────────────┘ │
    └─────────────────┘    └─────────────────┘
             │                      │
             └──── mTLS Tunnel ─────┘

The sidecar handles:

Certificate management: Automatic provisioning and rotation

Traffic interception: Transparent proxy for all network communications

Policy enforcement: Authorization rules and traffic routing

Observability: Metrics, tracing, and access logs
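
The policy-enforcement role can be pictured as a pure function over the peer identity and the request. The sketch below models only the `from` and `to` parts of the Istio rule shown earlier (the `when` header condition is left out); `AllowRule`, `principal_from_spiffe_id` and `is_allowed` are hypothetical names for illustration and not Envoy or Istio APIs.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Tuple


@dataclass(frozen=True)
class AllowRule:
    """Simplified stand-in for one rule of an authorization policy."""
    principals: Tuple[str, ...]
    methods: Tuple[str, ...]
    paths: Tuple[str, ...]


def principal_from_spiffe_id(spiffe_id: str) -> str:
    """Strip the scheme, e.g. spiffe://cluster.local/ns/a/sa/b -> cluster.local/ns/a/sa/b."""
    prefix = "spiffe://"
    if not spiffe_id.startswith(prefix):
        raise ValueError(f"not a SPIFFE ID: {spiffe_id!r}")
    return spiffe_id[len(prefix):]


def is_allowed(rule: AllowRule, spiffe_id: str, method: str, path: str) -> bool:
    """All conditions of the rule must hold for the request to pass."""
    principal = principal_from_spiffe_id(spiffe_id)
    return (
        principal in rule.principals
        and method in rule.methods
        and any(fnmatchcase(path, pattern) for pattern in rule.paths)
    )


rule = AllowRule(
    principals=("cluster.local/ns/production/sa/api-gateway",),
    methods=("POST",),
    paths=("/payments/*",),
)
gateway = "spiffe://cluster.local/ns/production/sa/api-gateway"
print(is_allowed(rule, gateway, "POST", "/payments/123"))
print(is_allowed(rule, gateway, "GET", "/payments/123"))
```

Because the identity comes from the peer certificate verified during the mTLS handshake, the application process never has to parse or trust it itself.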

Service Mesh Performance Impact

Sidecar proxies introduce resource overhead:

    Typical Resource Overhead:
    - Memory: 50-100MB per sidecar
    - CPU: 0.1-0.2 cores per sidecar
    - Network latency: 0.5-2ms additional hop
    - Throughput impact: 5-15% reduction

Optimisation strategies:

Resource limits tuned to workload requirements

Proxy configuration optimised for specific traffic patterns

Selective deployment only for services requiring mesh features

Hardware acceleration for cryptographic operations
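
Per-sidecar figures look small until they are multiplied across a fleet. The following back-of-the-envelope sketch uses the ranges quoted above as defaults; the helper name `sidecar_fleet_overhead` and the fleet size of 100 pods are assumptions for illustration only.

```python
from typing import Dict, Tuple


def sidecar_fleet_overhead(
    sidecars: int,
    memory_mb: Tuple[float, float] = (50, 100),
    cpu_cores: Tuple[float, float] = (0.1, 0.2),
) -> Dict[str, Tuple[float, float]]:
    """Return (low, high) totals for memory in MB and CPU in cores."""
    return {
        "memory_mb": (sidecars * memory_mb[0], sidecars * memory_mb[1]),
        "cpu_cores": (sidecars * cpu_cores[0], sidecars * cpu_cores[1]),
    }


totals = sidecar_fleet_overhead(100)  # assumed fleet of 100 pods
low_mem, high_mem = totals["memory_mb"]
low_cpu, high_cpu = totals["cpu_cores"]
print(f"memory: {low_mem:.0f}-{high_mem:.0f} MB, cpu: {low_cpu:.0f}-{high_cpu:.0f} cores")
```

Numbers like these are a useful input when deciding whether selective deployment is worth the extra configuration effort.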

Service Mesh Decision Matrix

Choose service mesh when:

Operating at significant scale (50+ services)

Requiring consistent security policies across services

Teams lack deep networking/security expertise

Compliance requires comprehensive audit trails

Need advanced traffic management (canary deployments, circuit breaking)

Avoid service mesh when:

Simple architectures with few services

Extremely latency-sensitive applications

Limited operational resources for mesh management

Services primarily communicate with external systems

Token Relay Pattern: Propagating User Context

Token relay patterns maintain user context across service boundaries by forwarding authentication tokens through the service call chain. This approach combines service authentication with user authorisation.
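
Before looking at a JWT-based version, the relay step itself can be sketched with plain header handling. This is a simplified illustration under stated assumptions: the `X-Service-Chain` header and the helper names are hypothetical, and a plain header is not tamper-proof, which is why the implementation that follows carries the chain inside a signed token instead.

```python
from typing import Dict, List


class CircularCallError(Exception):
    """Raised when a service finds itself already present in the call chain."""


def extend_service_chain(chain: List[str], service: str) -> List[str]:
    """Return a new chain with `service` appended, rejecting loops."""
    if service in chain:
        raise CircularCallError(f"{service} already in chain {chain}")
    return [*chain, service]


def relay_headers(incoming: Dict[str, str], service: str) -> Dict[str, str]:
    """Build outbound headers that forward the caller's token and request ID."""
    chain = [s for s in incoming.get("X-Service-Chain", "").split(",") if s]
    return {
        # The user's token is forwarded unchanged so downstream services
        # can make their own authorisation decisions.
        "Authorization": incoming["Authorization"],
        "X-Request-ID": incoming.get("X-Request-ID", ""),
        "X-Service-Chain": ",".join(extend_service_chain(chain, service)),
    }


first_hop = relay_headers(
    {"Authorization": "Bearer example-token", "X-Request-ID": "req-1"},
    "api-gateway",
)
second_hop = relay_headers(first_hop, "payment-service")
print(second_hop["X-Service-Chain"])
```

Each hop appends itself to the chain, so a service that sees its own name in an incoming request can refuse the call instead of looping.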

JWT-Based Implementation

    // API Gateway - Initial token validation and relay
    const jwt = require('jsonwebtoken');
    const axios = require('axios');

    class APIGateway {
      async authenticateAndRelay(req, res) {
        let token;
        let serviceToken;
        try {
          // Validate incoming JWT (HS256 assumed, since a shared secret is used)
          token = req.headers.authorization?.replace(/^Bearer /, '');
          const decoded = jwt.verify(token, process.env.JWT_SECRET, {
            algorithms: ['HS256']
          });

          // Drop the user token's registered claims: jwt.sign() throws if the
          // payload already has "exp" while expiresIn is also set.
          const { exp, iat, nbf, iss, aud, ...userClaims } = decoded;

          // Enrich token with service-specific claims
          serviceToken = jwt.sign({
            ...userClaims,
            iss: 'api-gateway',
            aud: 'internal-services',
            service_chain: ['api-gateway'],
            request_id: req.headers['x-request-id']
          }, process.env.SERVICE_JWT_SECRET, {
            algorithm: 'HS256',
            expiresIn: '5m' // Short-lived for internal use
          });
        } catch (error) {
          return res.status(401).json({ error: 'Authentication failed' });
        }

        try {
          // Forward to downstream service
          const response = await axios.post(
            'https://payment-service/process',
            req.body,
            {
              headers: {
                'Authorization': `Bearer ${serviceToken}`,
                'X-Original-Token': token,
                'X-Request-ID': req.headers['x-request-id']
              }
            }
          );
          res.json(response.data);
        } catch (error) {
          // A downstream failure is not an authentication failure
          res.status(502).json({ error: 'Downstream service error' });
        }
      }
    }

Service-to-Service Token Validation

    package main

    import (
        "context"
        "fmt"
        "net/http"
        "os"
        "strings"

        "github.com/golang-jwt/jwt/v4"
    )

    type ServiceClaims struct {
        UserID       string   `json:"user_id"`
        Roles        []string `json:"roles"`
        ServiceChain []string `json:"service_chain"`
        RequestID    string   `json:"request_id"`
        jwt.RegisteredClaims
    }

    // contextKey avoids collisions with context keys set by other packages.
    type contextKey string

    const claimsKey contextKey = "claims"

    // contains reports whether items includes target.
    func contains(items []string, target string) bool {
        for _, item := range items {
            if item == target {
                return true
            }
        }
        return false
    }

    func validateServiceToken(tokenString string) (*ServiceClaims, error) {
        token, err := jwt.ParseWithClaims(tokenString, &ServiceClaims{}, func(token *jwt.Token) (interface{}, error) {
            // Reject tokens signed with anything other than HMAC
            if _, ok := token.Method.(*jwt.SigningMethodHMAC); !ok {
                return nil, fmt.Errorf("unexpected signing method: %v", token.Header["alg"])
            }
            return []byte(os.Getenv("SERVICE_JWT_SECRET")), nil
        })
        if err != nil {
            return nil, err
        }

        if claims, ok := token.Claims.(*ServiceClaims); ok && token.Valid {
            // Validate audience and issuer
            if !claims.VerifyAudience("internal-services", true) {
                return nil, fmt.Errorf("invalid audience")
            }
            if !claims.VerifyIssuer("api-gateway", true) {
                return nil, fmt.Errorf("invalid issuer")
            }

            // Check service chain for loop detection
            if contains(claims.ServiceChain, "payment-service") {
                return nil, fmt.Errorf("circular service call detected")
            }
            return claims, nil
        }
        return nil, fmt.Errorf("invalid token")
    }

    func authMiddleware(next http.HandlerFunc) http.HandlerFunc {
        return func(w http.ResponseWriter, r *http.Request) {
            authHeader := r.Header.Get("Authorization")
            if authHeader == "" {
                http.Error(w, "Missing authorization header", http.StatusUnauthorized)
                return
            }

            tokenString := strings.TrimPrefix(authHeader, "Bearer ")
            claims, err := validateServiceToken(tokenString)
            if err != nil {
                http.Error(w, "Invalid token: "+err.Error(), http.StatusUnauthorized)
                return
            }

            // Add claims to request context
            ctx := context.WithValue(r.Context(), claimsKey, claims)
            next.ServeHTTP(w, r.WithContext(ctx))
        }
    }

Token Security Considerations

Token Scoping: Implement different token types for different contexts:

    {
      "user_token": {
        "sub": "user123",
        "aud": ["web-app"],
        "scope": "read:profile write:profile",
        "exp": 3600
      },
      "service_token": {
        "sub": "service:api-gateway",
        "aud": ["internal-services"],
        "scope": "service:call",
        "exp": 300,
        "service_chain": ["api-gateway"]
      }
    }

Here exp is shown as a lifetime in seconds (one hour for the user token, five minutes for the service token); an issued JWT carries exp as an absolute Unix timestamp.

Token Refresh Strategy: Implement sliding window refresh for long-running operations:

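    import time

    import jwt  # PyJWT


    class TokenManager:
        def __init__(self, refresh_threshold=300):  # 5 minutes
            self.refresh_threshold = refresh_threshold

        async def get_valid_token(self, current_token):
            try:
                # Read exp without verifying the signature; this only decides
                # when to refresh, receivers still verify the token fully.
                payload = jwt.decode(current_token, options={"verify_signature": False})
            except jwt.PyJWTError:
                # Token invalid, request new one
                return await self.authenticate()

            # Refresh if token expires within threshold
            if payload.get('exp', 0) - time.time() < self.refresh_threshold:
                return await self.refresh_token(current_token)
            return current_token

        async def refresh_token(self, current_token):
            # Placeholder: exchange the token at your identity provider
            raise NotImplementedError

        async def authenticate(self):
            # Placeholder: obtain a fresh token from your identity provider
            raise NotImplementedError

Token Relay Performance Optimization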

Token Caching Strategy:

    import redis


    class TokenCache:
        def __init__(self):
            self.redis_client = redis.Redis(host='localhost', port=6379, db=0)

        def cache_token(self, user_id, token, expiry):
            # Cache with 90% of actual expiry to ensure validity;
            # SETEX needs a positive TTL, so never go below one second
            cache_expiry = max(1, int(expiry * 0.9))
            self.redis_client.setex(
                f"token:{user_id}",
                cache_expiry,
                token
            )

        def get_cached_token(self, user_id):
            cached_token = self.redis_client.get(f"token:{user_id}")
            if cached_token:
                token = cached_token.decode('utf-8')
                # Validate token is still usable
                if self.validate_token_locally(token):
                    return token
            return None

        def validate_token_locally(self, token):
            # Placeholder: verify signature and expiry with your JWT library
            raise NotImplementedError

When to Use Token Relay

Ideal scenarios:

Need to maintain user context across service boundaries

Implementing fine-grained, user-specific authorization

Services require different permissions based on user roles

Audit trails must track user actions across services

Avoid token relay when:

Service-to-service calls don’t require user context

Extremely high-throughput scenarios where token overhead matters

Services are purely internal with no user-facing authorization requirements

Hybrid Approaches and Decision Framework

Real-world systems often combine multiple patterns. Consider this hybrid architecture:

Internet → [API Gateway] → [Service Mesh] → [Internal Services]

↓ ↓ ↓

JWT Validation mTLS + Identity Token + mTLS

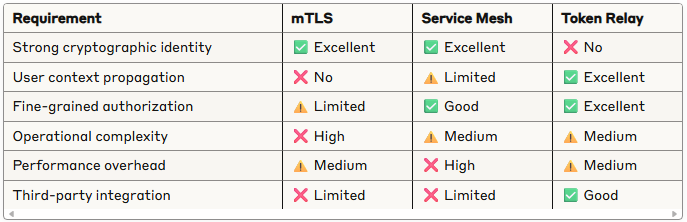

Token Relay Service Policies AuthorizationDecision Matrix

Implementation Roadmap

Phase 1: Foundation (Months 1–2)

Implement token relay for user-facing services

Establish certificate authority for internal services

Deploy basic mTLS between critical services

Phase 2: Scale (Months 3–4)

Evaluate service mesh for complex deployments

Implement automated certificate management

Add comprehensive monitoring and alerting

Phase 3: Optimization (Months 5–6)

Fine-tune performance based on production metrics

Implement advanced authorization policies

Establish security incident response procedures

Monitoring and Observability

Effective monitoring is crucial for service identity systems:

    # Key metrics to track
    authentication_requests_total:
      - Counter of authentication attempts by service, method, result
    authentication_duration_seconds:
      - Histogram of authentication latency by method
    certificate_expiry_days:
      - Gauge of days until certificate expiry by service
    token_validation_errors_total:
      - Counter of token validation failures by error type
    service_mesh_connection_failures_total:
      - Counter of mTLS connection failures by source/destination

TL;DR

Service-to-service authentication in microservices requires careful consideration of security requirements, operational complexity, and performance constraints. mTLS provides the strongest cryptographic guarantees but demands sophisticated certificate management. Service meshes simplify operations while introducing infrastructure complexity. Token relay patterns excel at maintaining user context but require careful token lifecycle management.

The most successful implementations combine multiple patterns strategically: using service meshes for infrastructure security, token relay for user context, and selective mTLS for high-security communications. Start with simpler approaches and evolve toward more sophisticated patterns as your architecture and operational capabilities mature.

Remember that security is not a one-time implementation but an ongoing process. Regular security reviews, certificate rotation, policy updates, and incident response procedures are essential for maintaining secure service-to-service communication at scale.